Briefing Room

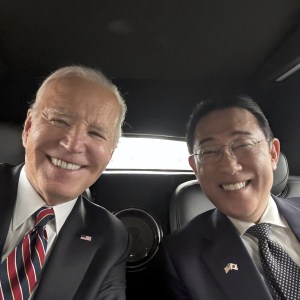

Remarks by President Biden and Prime Minister Kishida Fumio of Japan at Arrival Ceremony

Background Press Call Previewing the Bilateral Meeting of President Biden and President Marcos of the Philippines and the Trilateral Leaders’ Summit

Remarks by President Biden on Rebuilding the Francis Scott Key Bridge and Reopening the Port of Baltimore | Baltimore, MD

Statement from President Joe Biden on Arizona Supreme Court Decision to Uphold Abortion Ban from 1864

Statement from President Joe Biden on CHIPS and Science Act Preliminary Agreement with TSMC

Statement from President Joe Biden on the 30th Commemoration of the Genocide in Rwanda

FACT SHEET: The Biden-Harris Administration Highlights Recent Successes in Improving Customer Service and Delivery for Safety Net Benefits; Identifies Opportunities for States for Further Improvement

FACT SHEET: Biden-Harris Administration Announces New Action to Implement Bipartisan Safer Communities Act, Expanding Firearm Background Checks to Fight Gun Crime

Statement from President Joe Biden on the March Consumer Price Index

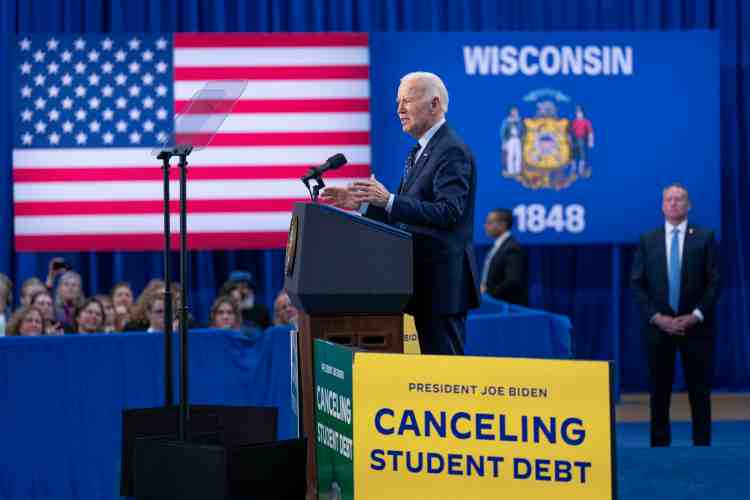

Featured Media

Great to have you back in the States, Mr. Prime Minister.

Investing in the American People

From rebuilding our infrastructure to lowering costs for families, President Biden’s economic agenda is investing in America.

We Want to Hear From You

Send a text message to President Biden, or contact the White House.

Message and data rates may apply.

Reply HELP for help or STOP to cancel.

The White House

Learn more about the current administration, our country’s former presidents, and the official residence.